NameNodes have scalability limits because of the metadata overhead of inodes (files and directories) and file blocks, the number of Datanode heartbeats, and the number of HDFS RPC client requests. The common solution is to split the filesystem into smaller subclusters (HDFS Federation) and provide a federated view (ViewFs). The problem is how to maintain the split of the subclusters (e.g., namespace partition), which forces users to connect to multiple subclusters and manage the allocation of folders/files to them.

A natural extension to this partitioned federation is to add a layer of software responsible for federating the namespaces. This extra layer allows users to access any subcluster transparently, lets subclusters manage their own block pools independently, and supports rebalancing of data across subclusters. To accomplish these goals, the federation layer directs block accesses to the proper subcluster, maintains the state of the namespaces, and provides mechanisms for data rebalancing. This layer must be scalable, highly available, and fault tolerant.

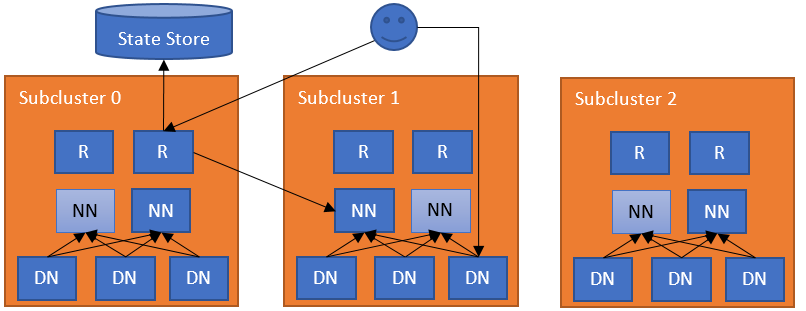

This federation layer comprises multiple components. The Router component has the same interface as a NameNode and forwards client requests to the correct subcluster, based on ground-truth information from a State Store. The State Store combines a remote Mount Table (in the flavor of ViewFs, but shared between clients) and utilization (load/capacity) information about the subclusters. This approach has the same architecture as YARN federation.

The simplest configuration deploys a Router on each NameNode machine. The Router monitors the local NameNode and heartbeats the state to the State Store. When a regular DFS client contacts any of the Routers to access a file in the federated filesystem, the Router checks the Mount Table in the State Store (i.e., the local cache) to find out which subcluster contains the file. Then it checks the Membership table in the State Store (i.e., the local cache) for the NameNode responsible for the subcluster. After it has identified the correct NameNode, the Router proxies the request. The client accesses Datanodes directly.

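There can be multiple Routers in the system with soft state. Each Router has two roles: the federated interface, which exposes a NameNode-like interface to clients and forwards their requests, and the NameNode heartbeat, which maintains the information about a NameNode in the State Store.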

In the federated interface role, the Router receives a client request, checks the State Store for the correct subcluster, and forwards the request to the active NameNode of that subcluster. The reply from the NameNode then flows in the opposite direction. The Routers are stateless and can be behind a load balancer. For performance, the Router also caches remote mount table entries and the state of the subclusters. To make sure that changes have been propagated to all Routers, each Router heartbeats its state to the State Store.

The communications between the Routers and the State Store are cached (with timed expiration for freshness). This improves the performance of the system.
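The timed-expiration caching described above can be sketched as follows. This is a minimal illustration and not the Router's implementation: the `TtlCache` class, its `loader` callback, and the injectable `clock` are hypothetical names introduced here, and the 60000 ms value simply mirrors the default of `dfs.federation.router.cache.ttl`.

```python
import time


class TtlCache:
    """Holds one loaded value and reloads it once the TTL has elapsed.

    Hypothetical helper for illustration; `loader` stands in for a read
    from the State Store.
    """

    def __init__(self, loader, ttl_ms, clock=time.monotonic):
        self._loader = loader
        self._ttl_s = ttl_ms / 1000.0
        self._clock = clock
        self._value = None
        self._loaded_at = None  # None means nothing has been loaded yet

    def get(self):
        now = self._clock()
        stale = self._loaded_at is None or now - self._loaded_at >= self._ttl_s
        if stale:
            # Only a stale cache triggers a round trip to the backing store.
            self._value = self._loader()
            self._loaded_at = now
        return self._value


# Usage: repeated lookups within the TTL are served from memory.
mount_cache = TtlCache(lambda: {"/tmp": "ns1"}, ttl_ms=60000)
entries = mount_cache.get()
```

The trade-off is the usual one for such caches: a longer TTL reduces load on the State Store, while a shorter TTL reduces the window in which a Router may act on outdated entries.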

In the NameNode heartbeat role, the Router periodically checks the state of a NameNode (usually on the same server) and reports its high availability (HA) state and load/space status to the State Store. Note that this is an optional role, as a Router can be independent of any subcluster. For performance with NameNode HA, the Router uses the high availability state information in the State Store to forward the request to the NameNode that is most likely to be active. Note that this service can be embedded into the NameNode itself to simplify the operation.

The Router tolerates failures at multiple levels.

Federated interface HA: The Routers are stateless and metadata operations are atomic at the NameNodes. If a Router becomes unavailable, any other Router can take over for it. The clients configure their DFS HA client (e.g., ConfiguredFailoverProxyProvider or RequestHedgingProxyProvider) with all the Routers in the federation as endpoints.

NameNode heartbeat HA: For high availability and flexibility, multiple Routers can monitor the same NameNode and heartbeat the information to the State Store. This increases clients’ resiliency to stale information, should a Router fail. Conflicting NameNode information in the State Store is resolved by each Router via a quorum.

Unavailable NameNodes: If a Router cannot contact the active NameNode, then it will try the other NameNodes in the subcluster. It will first try those reported as standby and then the unavailable ones. If the Router cannot reach any NameNode, then it throws an exception.

Expired NameNodes: If a NameNode heartbeat has not been recorded in the State Store for a multiple of the heartbeat interval, the monitoring Router will record that the NameNode has expired and no Routers will attempt to access it. If an updated heartbeat is subsequently recorded for the NameNode, the monitoring Router will restore the NameNode from the expired state.
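The handling of unavailable and expired NameNodes described above can be sketched as follows. This is an illustration under stated assumptions, not the Router's code: the record fields (`address`, `state`, `last_heartbeat_ms`), the state names, the `NoNamenodeAvailable` exception, and the `call` callback are all hypothetical.

```python
class NoNamenodeAvailable(Exception):
    """Raised when no NameNode of the subcluster could be reached."""


# Lower value means the NameNode is tried earlier.
PRIORITY = {"ACTIVE": 0, "STANDBY": 1, "UNAVAILABLE": 2}


def candidates(namenodes, now_ms, expiration_ms):
    """Drop expired NameNodes, then order active, standby, unavailable."""
    live = [
        nn for nn in namenodes
        if now_ms - nn["last_heartbeat_ms"] <= expiration_ms
    ]
    return sorted(live, key=lambda nn: PRIORITY[nn["state"]])


def invoke(namenodes, call, now_ms, expiration_ms):
    """Try each candidate in order; raise if every attempt fails."""
    tried = []
    for nn in candidates(namenodes, now_ms, expiration_ms):
        try:
            return call(nn["address"])
        except ConnectionError:
            tried.append(nn["address"])
    raise NoNamenodeAvailable(f"no NameNode reachable, tried {tried}")
```

A NameNode whose heartbeat is recorded again falls back inside the expiration window and is therefore returned by `candidates` on the next call, which matches the restore behaviour described for expired NameNodes.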

To interact with the users and the administrators, the Router exposes multiple interfaces.

RPC: The Router RPC implements the most common interfaces clients use to interact with HDFS. The current implementation has been tested using analytics workloads written in plain MapReduce, Spark, and Hive (on Tez, Spark, and MapReduce). Advanced functions like snapshot, encryption and tiered storage are left for future versions. All unimplemented functions will throw exceptions.

Admin: Administrators can query information from clusters and add/remove entries from the mount table over RPC. This interface is also exposed through the command line to get and modify information from the federation.

Web UI: The Router exposes a Web UI visualizing the state of the federation, mimicking the current NameNode UI. It displays information about the mount table, membership information about each subcluster, and the status of the Routers.

WebHDFS: The Router provides the HDFS REST interface (WebHDFS) in addition to the RPC one.

JMX: The Router exposes metrics through JMX, mimicking the NameNode. This is used by the Web UI to get the cluster status.
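As an illustration of the WebHDFS interface listed above, the following sketch builds a request URL using the standard WebHDFS layout (`/webhdfs/v1/<path>?op=<OPERATION>`). The host `router1` and port `50071` are placeholders chosen for this example; the Router's HTTP address is not specified in this document and depends on the deployment.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, port, path, op, params=None):
    """Build a WebHDFS REST URL for a path in the federated namespace."""
    query = {"op": op}
    if params:
        query.update(params)
    # quote() keeps "/" intact and escapes characters such as spaces.
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{urlencode(query)}"


# Usage: list a directory through a Router (host and port are assumptions).
url = webhdfs_url("router1", 50071, "/data/app1", "LISTSTATUS")
```

Whether a given operation succeeds still depends on the Router implementing it, since unimplemented functions throw exceptions.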

Some operations are not available in Router-based federation. The Router throws exceptions for those. Examples users may encounter include the following.

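The (logically centralized, but physically distributed) State Store maintains the state of the subclusters (HA state, load, and capacity) and the mapping between folders and subclusters (the remote Mount Table).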

The backend of the State Store is pluggable, and we leverage the fault tolerance of the backend implementations. The main information stored in the State Store is:

Membership: The membership information encodes the state of the NameNodes in the federation. This includes information about the subcluster, such as storage capacity and the number of nodes. The Router periodically heartbeats this information about one or more NameNodes. Given that multiple Routers can monitor a single NameNode, the heartbeat from every Router is stored. The Routers apply a quorum of the data when querying this information from the State Store. The Routers discard the entries older than a certain threshold (e.g., ten Router heartbeat periods).

Mount Table: This table hosts the mapping between folders and subclusters. It is similar to the mount table in ViewFs: each entry specifies the federated folder, the destination subcluster, and the path in that subcluster.
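The way a Router might combine several membership heartbeats for one NameNode can be sketched as follows. The text above does not define the quorum rule precisely, so this example assumes a simple plurality vote over non-stale reports; the record fields and the `quorum_state` function are hypothetical. The 50000 ms age limit corresponds to ten heartbeat periods at the default `dfs.federation.router.heartbeat.interval` of 5000 ms.

```python
from collections import Counter


def quorum_state(reports, now_ms, max_age_ms):
    """Return the state reported by most fresh heartbeats, or None.

    Assumption: "quorum" is modelled as the most common state among the
    reports that are not older than max_age_ms.
    """
    fresh = [
        r["state"] for r in reports
        if now_ms - r["timestamp_ms"] <= max_age_ms
    ]
    if not fresh:
        return None
    return Counter(fresh).most_common(1)[0][0]


# Usage: three Routers report on the same NameNode; two reports are stale.
reports = [
    {"router": "r1", "state": "ACTIVE", "timestamp_ms": 95000},
    {"router": "r2", "state": "ACTIVE", "timestamp_ms": 96000},
    {"router": "r3", "state": "STANDBY", "timestamp_ms": 97000},
    {"router": "r4", "state": "STANDBY", "timestamp_ms": 10000},
    {"router": "r5", "state": "STANDBY", "timestamp_ms": 20000},
]
state = quorum_state(reports, now_ms=100000, max_age_ms=50000)
```

Discarding stale entries before voting matters here: without the age filter, the two outdated STANDBY reports would outvote the current ACTIVE ones.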

By default, the Router is ready to take requests and monitor the NameNode on the local machine. It needs to know the State Store endpoint, which is configured through dfs.federation.router.store.driver.class. The rest of the options are documented in hdfs-default.xml.

Once the Router is configured, it can be started:

[hdfs]$ $HADOOP_PREFIX/sbin/hadoop-daemon.sh --script $HADOOP_PREFIX/bin/hdfs start dfsrouter

And to stop it:

[hdfs]$ $HADOOP_PREFIX/sbin/hadoop-daemon.sh --script $HADOOP_PREFIX/bin/hdfs stop dfsrouter

The mount table entries are essentially the same as in ViewFs. A good practice for simplifying management is to give folders in the federated namespace the same names as in the destination namespaces. For example, if we want to mount /data/app1 in the federated namespace, it is recommended to use that same path in the destination namespace.

The federation admin tool supports managing the mount table. For example, to create three mount points and list them:

[hdfs]$ $HADOOP_HOME/bin/hdfs dfsrouteradmin -add /tmp ns1 /tmp

[hdfs]$ $HADOOP_HOME/bin/hdfs dfsrouteradmin -add /data/app1 ns2 /data/app1

[hdfs]$ $HADOOP_HOME/bin/hdfs dfsrouteradmin -add /data/app2 ns3 /data/app2

[hdfs]$ $HADOOP_HOME/bin/hdfs dfsrouteradmin -ls

If a mount point is not set, the Router will map it to the default namespace dfs.federation.router.default.nameserviceId.
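How the three mount points above could be resolved, including the fallback to the default namespace, is sketched below. This is a simplified model and not the actual MountTableResolver: it assumes longest-prefix matching on path components, and it uses `ns0` as a stand-in for the value of dfs.federation.router.default.nameserviceId.

```python
# Mount table as created by the dfsrouteradmin commands above:
# federated path -> (destination nameservice, destination path).
MOUNT_TABLE = {
    "/tmp": ("ns1", "/tmp"),
    "/data/app1": ("ns2", "/data/app1"),
    "/data/app2": ("ns3", "/data/app2"),
}


def resolve(path, mount_table, default_ns):
    """Map a federated path to (nameservice, remote path).

    Assumption: the longest mount point that is a whole-component prefix
    of the path wins; unmatched paths go to the default nameservice.
    """
    best = None
    for mount in mount_table:
        prefix = mount.rstrip("/")
        if path == mount or path.startswith(prefix + "/"):
            if best is None or len(mount) > len(best):
                best = mount
    if best is None:
        return default_ns, path
    ns, dest = mount_table[best]
    suffix = path[len(best.rstrip("/")):]
    return ns, (dest.rstrip("/") + suffix) or "/"


# Usage: "ns0" is an assumed default nameservice.
target = resolve("/data/app1/logs/part-0", MOUNT_TABLE, "ns0")
```

Matching on whole path components keeps a path such as /data/app10 from being captured by the /data/app1 mount point.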

For clients to use the federated namespace, they need to create a new nameservice that points to the Routers. For example, a cluster with four namespaces (ns0, ns1, ns2, and ns3) can add a new one to hdfs-site.xml called ns-fed, which points to two of the Routers:

<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>ns0,ns1,ns2,ns3,ns-fed</value>
  </property>
  <property>
    <name>dfs.namenodes.ns-fed</name>
    <value>r1,r2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns-fed.r1</name>
    <value>router1:rpc-port</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns-fed.r2</name>
    <value>router2:rpc-port</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.ns-fed</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.client.failover.random.order</name>
    <value>true</value>
  </property>
</configuration>

Setting dfs.client.failover.random.order to true distributes the load evenly across the Routers.
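The effect of the random ordering can be pictured with a short sketch. It is a conceptual model only and not the HDFS client code: `failover_order` is a hypothetical function that returns the order in which a client would try the configured Router endpoints.

```python
import random


def failover_order(routers, random_order, rng=random):
    """Return the order in which a client tries the Router endpoints."""
    order = list(routers)  # copy so the configured list is left untouched
    if random_order:
        # Each client starts from a different Router, spreading the load.
        rng.shuffle(order)
    return order


# Usage: with random_order=False every client would start at router1.
endpoints = ["router1:rpc-port", "router2:rpc-port"]
fixed = failover_order(endpoints, random_order=False)
shuffled = failover_order(endpoints, random_order=True)
```

Without shuffling, all clients would contact the first configured Router and use the second only on failure, which concentrates the load on one machine.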

With this setting a user can interact with ns-fed as a regular namespace:

$ $HADOOP_HOME/bin/hdfs dfs -ls hdfs://ns-fed/

/tmp

/data

This federated namespace can also be set as the default one at core-site.xml using fs.defaultFS.

One can add the configurations for Router-based federation to hdfs-site.xml. The main options are documented in hdfs-default.xml. The configuration values are described in this section.

The RPC server to receive connections from the clients.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.default.nameserviceId | | Nameservice identifier of the default subcluster to monitor. |
| dfs.federation.router.rpc.enable | true | If true, the RPC service to handle client requests in the router is enabled. |
| dfs.federation.router.rpc-address | 0.0.0.0:8888 | RPC address that handles all clients requests. |
| dfs.federation.router.rpc-bind-host | 0.0.0.0 | The actual address the RPC server will bind to. |
| dfs.federation.router.handler.count | 10 | The number of server threads for the router to handle RPC requests from clients. |
| dfs.federation.router.handler.queue.size | 100 | The size of the queue for the number of handlers to handle RPC client requests. |
| dfs.federation.router.reader.count | 1 | The number of readers for the router to handle RPC client requests. |
| dfs.federation.router.reader.queue.size | 100 | The size of the queue for the number of readers for the router to handle RPC client requests. |

The Router forwards the client requests to the NameNodes. It uses a pool of connections to reduce the latency of creating them.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.connection.pool-size | 1 | Size of the pool of connections from the router to namenodes. |
| dfs.federation.router.connection.clean.ms | 10000 | Time interval, in milliseconds, to check if the connection pool should remove unused connections. |
| dfs.federation.router.connection.pool.clean.ms | 60000 | Time interval, in milliseconds, to check if the connection manager should remove unused connection pools. |

The administration server to manage the Mount Table.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.admin.enable | true | If true, the RPC admin service to handle client requests in the router is enabled. |
| dfs.federation.router.admin-address | 0.0.0.0:8111 | RPC address that handles the admin requests. |
| dfs.federation.router.admin-bind-host | 0.0.0.0 | The actual address the RPC admin server will bind to. |
| dfs.federation.router.admin.handler.count | 1 | The number of server threads for the router to handle RPC requests from admin. |

The connection to the State Store and the internal caching at the Router.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.store.enable | true | If true, the Router connects to the State Store. |
| dfs.federation.router.store.serializer | StateStoreSerializerPBImpl | Class to serialize State Store records. |
| dfs.federation.router.store.driver.class | StateStoreZKImpl | Class to implement the State Store. |
| dfs.federation.router.store.connection.test | 60000 | How often to check for the connection to the State Store in milliseconds. |
| dfs.federation.router.cache.ttl | 60000 | How often to refresh the State Store caches in milliseconds. |
| dfs.federation.router.store.membership.expiration | 300000 | Expiration time in milliseconds for a membership record. |

Forwarding client requests to the right subcluster.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.file.resolver.client.class | MountTableResolver | Class to resolve files to subclusters. |
| dfs.federation.router.namenode.resolver.client.class | MembershipNamenodeResolver | Class to resolve the namenode for a subcluster. |

Monitor the namenodes in the subclusters for forwarding the client requests.

| Property | Default | Description |
|---|---|---|
| dfs.federation.router.heartbeat.enable | true | If true, the Router heartbeats into the State Store. |
| dfs.federation.router.heartbeat.interval | 5000 | How often the Router should heartbeat into the State Store in milliseconds. |
| dfs.federation.router.monitor.namenode | | The identifier of the namenodes to monitor and heartbeat. |
| dfs.federation.router.monitor.localnamenode.enable | true | If true, the Router should monitor the namenode in the local machine. |